The Online Safety Act Has Nothing to Do With Child Safety and Everything to Do With Censorship

From deteriorating youth mental health to the misogynistic radicalisation of boys, the internet is rife with harmful content. Something must be done. It isn’t the UK’s Online Safety Act.

Ostensibly, the act – passed in October 2023 and now being implemented in phases by the communications regulator Ofcom – is designed to protect children from harmful content, including by requiring social media companies to restrict access to online pornography and age-inappropriate content. This requirement went into effect last week, when UK users suddenly found themselves required to verify their age or have it estimated by the platform based on their government-issued IDs, biometrics or behavioural data.

To the unsuspecting, age verification sounds reasonable: kids can’t just walk into the corner shop and buy alcohol or porn. Shouldn’t there be similar rules online? Perhaps – but this isn’t what the Online Safety Act does. Upon its implementation, UK users immediately noticed that political content about the genocide in Gaza or the war in Ukraine was blocked, along with discussion forums about sexual assault and hobbyist groups about hamsters. Platforms like Spotify and Wikipedia have also been blocked.

UK users began compiling a list of banned sites, and public outrage led to a petition calling on parliament to repeal the act; the petition has since received almost half a million signatures. The government responded that it has “no plans to repeal” the act. The backlash brought together Nigel Farage and Owen Jones in an unlikely alliance to protect free speech online. Technology secretary Peter Kyle responded by describing the act’s critics as being “on the side of predators [like] Jimmy Savile”.

Far from being a way of protecting children online just as we might do offline, what the act does is more akin to preventing anyone, of any age, from walking into a supermarket, shop, library or even a friend’s house.

It also leaves behind a paper trail of your activities and whereabouts: when an age verification system takes a picture of your driving licence to authenticate your age, it collects all the information available on your ID, including your face, age, date of birth and address. The age verification vendor now has access to information about you that can be used to build consumer profiles and train other models, or be sold to the highest bidder. In the offline context, the supermarket clerk may forget who you are after you show your ID; online, the vendor keeps a data trail about you that you cannot control. The result looks more like surveillance than safeguarding.

Proponents of the Online Safety Act claim that they want to protect children from the harms of big tech. In practice, however, it prevents young people from learning, expressing themselves and socialising; creates a pretext for censoring political dissent; and concentrates more power in the hands of the tech industry.

Protecting Zionists, not children.

From its conception, the Online Safety Act had little to do with child safety. It began as the online harms white paper in 2019 and soon became the Online Safety bill, introduced into parliament by two Conservative MPs. For the next four years, the bill received heavy criticism across the political spectrum for its impact on democratic freedoms, technical feasibility and proportionality, with human rights defenders, technology experts and even a parliamentary committee condemning the bill.

The bill has been described as “a blueprint for digital repression” by leading digital rights groups. Civil society highlighted two of its most troubling aspects. First, it introduced the “duty of care” provision, which would require platform companies to remove vaguely defined “harmful” content. This “legal but harmful” clause joins the ranks of censorship and surveillance laws that governments around the world often propose in the aftermath of mass political action (such as, say, millions of people marching through the streets to protest a genocide) to suppress political dissent. The UK is no different.

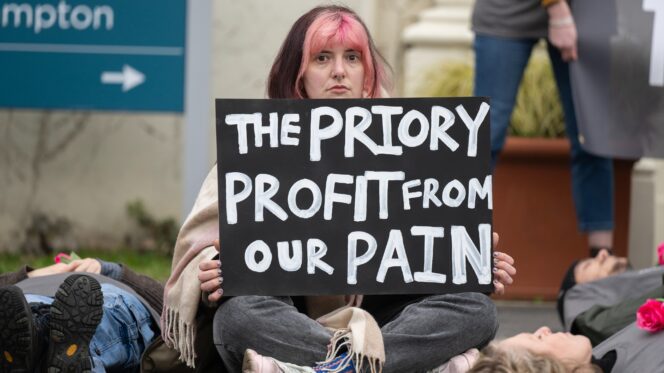

The “legal but harmful” clause was eventually dropped, but the spirit of censorship remains in the act’s “harmful content” language, which gives wide latitude to platform companies to define “harmful” content – and for the state to influence that definition. In fact, those pushing for the bill seemed more motivated by protecting Zionism and its proponents than children. Members of the Online Safety Act Network include the Antisemitism Policy Trust, Institute for Strategic Dialogue and the Alliance to Counter Crime Online, all of which have advised Ofcom in drafting the bill. The differential impact of the act’s ban illustrates the political motive underpinning it. Shortly after the act went into effect, the subreddit r/IsraelExposed was blocked. Meanwhile, X/Twitter restricted access to footage of a protest that took place in Leeds in defence of asylum seekers. While political dissent is being suppressed, content from the pick-up artist community and child modelling sites remains apparently unaffected.

Second, the bill called for scanning encrypted messaging services in the name of detecting child sexual abuse materials (CSAM), echoing EU regulators’ legislative efforts to regulate private communication, or “chat control”. End-to-end encryption has long been a battleground for human rights defenders as the technology preserves privacy in digital communication, which promotes open communication and protects political dissent. While some bad actors may use encrypted apps to engage in illegal activities, end-to-end encryption is an all-or-nothing technology that cannot be applied selectively, according to tech experts.

There is little evidence that encryption is responsible for CSAM. Despite this, a number of child protection campaigners have supported the act, lending it legitimacy. They are not representative of the child rights sector.

Instead, clinical experts and advocates working on the frontlines of child sexual abuse response emphasise the importance of taking a public health approach by creating non-judgmental environments for children to seek help. They claim the act will severely restrict young people’s access to sexual and reproductive health information and resources, further endangering them. Some research suggests that children who don’t know how to name their body parts are at a higher risk of sexual abuse. The government has put the encryption clause on hold until it magically becomes “technically feasible” to scan private communication.

The new book-banning.

Even if the act’s proponents were interested in protecting children, this would not be the way to do it. The underlying logic of the Online Safety Act is that shielding young people from unsavoury, objectionable or difficult content – rather than teaching them how to engage with it critically – is a necessary act of protection. This rhetoric joins the ranks of book-banning and abstinence-based sex education by infringing on young people’s right to information, privacy, and expression – rights enshrined in the 1989 UN convention on the rights of the child, which the UK has signed.

Today’s moral panic around social media and youth owes much to Jonathan Haidt’s The Anxious Generation, which claims that smartphones and social media have “rewired” children’s brains and worsened their mental health. As has been widely pointed out, Haidt is not an expert on child development or online harms, and the scientific community has thoroughly refuted his book for its sensationalism and reactionary undertones. Yet Haidt’s pop psychology book continues to be referenced by Ofcom.

How then to address the various crises – mental health, for one – that our young people are suffering, and to which social media appears to contribute? Well, ask them: in 2024, Crisis Text Line, a mental health hotline that provides free, confidential support by text in English or Spanish to young people globally, asked its adolescent users what they need to cope with crises. The number one response? More opportunities for social connection, which age verification does nothing but restrict.

A big tech handout.

The real and perhaps only winners of the Online Safety Act are not children, but tech companies.

Take the age verification vendors. As content moderation scholar Eric Goldman writes, platform companies will outsource age verification to specialised vendors in an effort to comply with the act. This means that a small number of age verification vendors will have access to users’ personal data, which they can monetise into consumer profiles. These vendors also stand to gain lucrative contracts with big platform companies and government agencies as the market for digital identity verification expands. Unsurprisingly, the Age Verification Providers Association has been a key driver of the act.

Meanwhile, the tech industry will continue expanding its extraction of our personal data, feeding its surveillance business model that, for example, creates targeted advertising that coerces kids into sports gambling or mines their facial data to train models. An online safety regulation that actually centred young people’s wellbeing would go after the tech industry’s predatory business model for starters.

Ofcom has set a dangerous precedent. There is a wave of child online safety legislation around the world that will be emboldened by the UK’s move. Australia led this wave last year by passing the social media minimum age restriction, which will go into effect this December. In the US, the Kids Online Safety Act was passed in the Senate but voted down by the House last year, though several states have already passed age verification laws, with more set to join. The Malaysian government passed its Online Safety Act in 2024. Canada’s is currently under review.

There is a glaring contradiction here. The preoccupation with online safety, divorced from young people’s real lives, distracts politicians from being held accountable for the ruthless cuts they are making to education, healthcare and domestic violence shelters – things that would actually improve children’s mental health. Child safety is too urgent and serious an issue to be made into a Trojan Horse for authoritarianism. Responsible adults must redirect their moral panic to what actually matters: improving young people’s lives.

Dr Kate Sim directs the children’s online safety and privacy research programme at the University of Western Australia’s Tech & Policy Lab.